Learn a Language in 6 Months

Speech Genie

your AI Language Conversation Partner

What is Speech Genie?

Speech Genie is a completely new generation of language learning technology that we are now building.

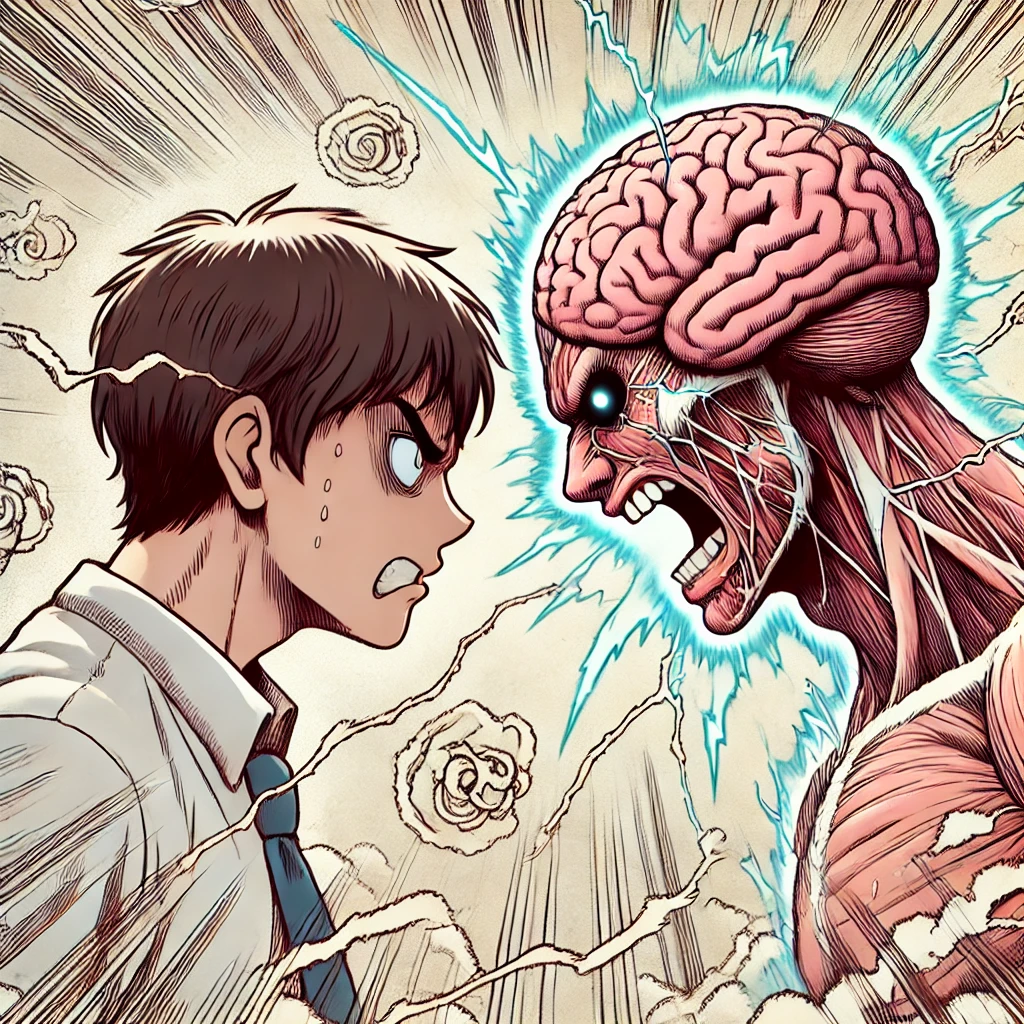

KUNGFU ENGLISH

takes the best of Chris Lonsdale’s “Kungfu English” system (for Chinese people to learn English)

BRAIN SCIENCE

and combines it with John Ball’s natural language AI, which is based entirely on brain science!

This sets a completely new standard for second language learning!

The result is a perfect interactive computer-based self-learning system for mastering a second language!

Learners at any level of competence

can engage in a fully immersive learning environment, one that your brain thrives on!

Reproducing Developmental Stages

No other major language learning platform in the world uses this approach.

The Speech Genie system, currently under development, will combine leading-edge brain-based learning technologies with a language “bot” that understands the MEANING of what you are communicating, and then does what you want by following your commands!

You will be communicating with this digital avatar in the same way you would speak with a trusted friend or “language parent”.

AI DIGITAL AVATAR

aka “Language Parent”

At the core of the system will be the speech “genie”: a digital avatar that can understand what you, as a learner, say and then follow your commands! This allows you to talk with the system as if it were a real human, giving you a stress-free, fun, immersive and highly effective learning experience.

Stop Fighting Your Brain!

Work With IT!

Have you ever wondered why so few people in the world actually master a second language? If all the school classes, university classes, online classes and other systems for teaching languages actually worked, then pretty much everyone in the world would be multilingual. This, clearly, is not the case. True, some people speak a few languages well, but this is rare. And those who do speak several languages well have mostly learned them OUTSIDE formal educational contexts.

Why Current Language Teaching “fails”

So, what’s going on?

The answer is simple! The way languages are taught in the world today almost universally flies in the face of the way in which the brain actually acquires language! Despite all that we know about the brain, and how people acquire language, learning systems available today fail to incorporate this knowledge into their design!

Most language courses cause learners to fight against what their brain can do naturally, therefore guaranteeing slow learning or complete failure!

How Does the Brain Learn Language?

Pattern Recognition

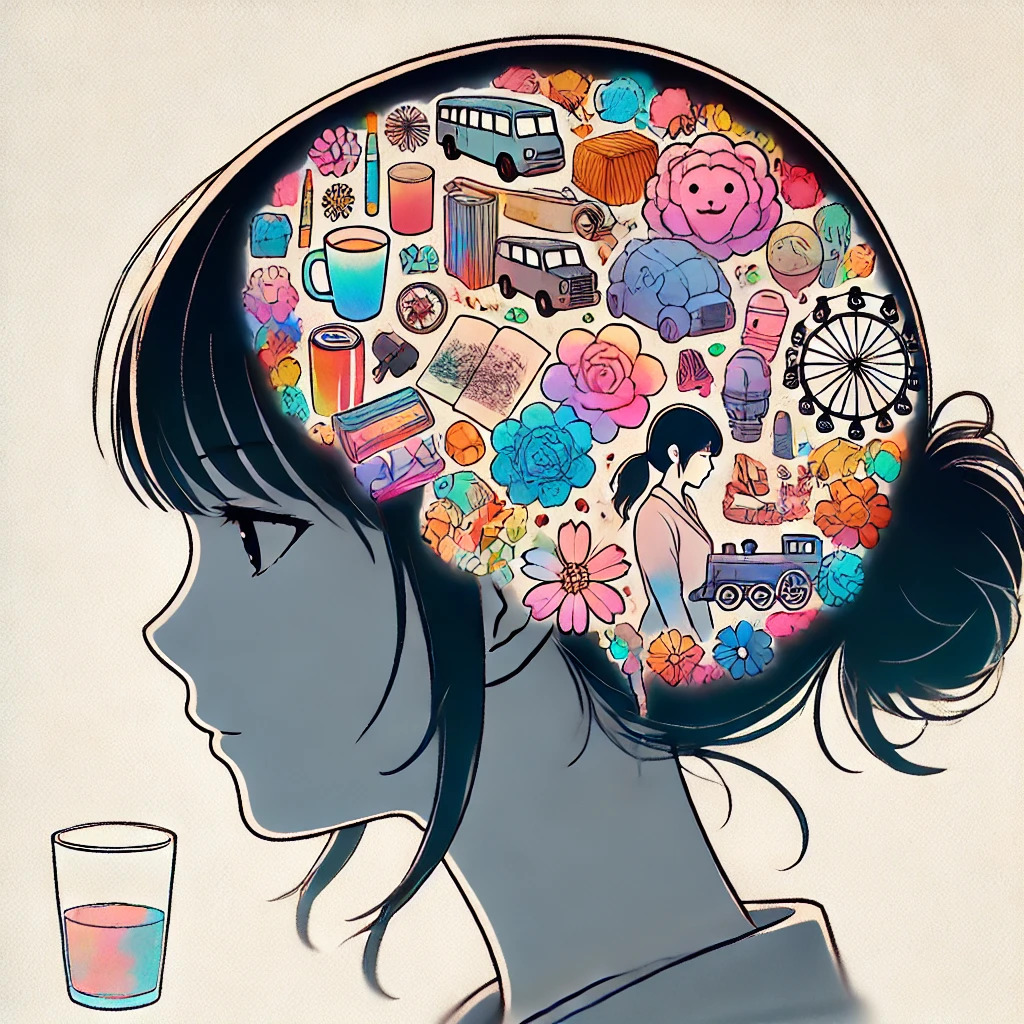

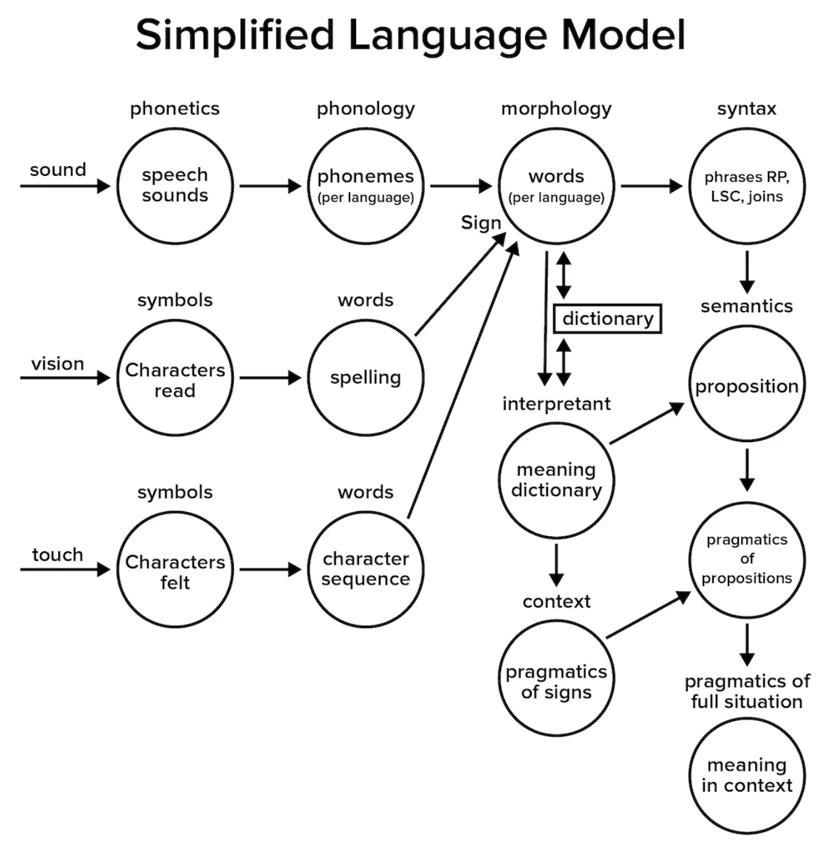

The brain recognises patterns (mostly in sound) and connects those patterns to meaning! Meaning is made up of the internal representations that people have in their minds about the world, including objects, actions, aspects of objects (size, shape, colour, etc.), relationships, and so on. When patterns repeat enough times, in various contexts where the meaning is clear, the brain stores the patterns for later use!

It REALLY is THAT SIMPLE.

Allow People to Experience Patterns

With the Kungfu English learning system

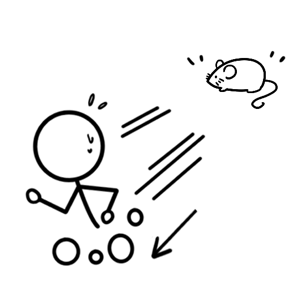

With Speech Genie, we take this work to a new level: learners can engage in two-way interaction with a digital avatar that understands what they say, thereby closing the loop! Learners first engage in ways that create recognition memory. THEN, with that recognition memory in place, learners extend themselves to production memory (recall memory) and speak to a language partner.

No Embarrassment, No Stress

Because the language partner in Speech Genie is not a flesh-and-blood human, embarrassment and fear of speaking do not arise. Removing them is a KEY element in the successful acquisition of a new language.

Through this process you learn to connect

the words that you hear to meaning, the same way you do with your mother tongue.

And, step by step, you learn to connect words together into sequences that make you fully understood at deeper and deeper levels.

AI and LANGUAGE

AI-based Language Interaction That You Can Count On?

If you have used systems like Grok or ChatGPT at all, you will probably have been both amazed and, at the same time, extremely frustrated. Why?

Your amazement probably comes from the speed at which these systems pull information together into a usable form. For instance, a prompt asking for an overview of a topic, based on research on the internet, can produce a credible AI-generated report in about 45 seconds. Doing the same research yourself would take many hours.

LLM – Large Language Model

GPT Hallucinations

At the same time, the research delivered is often wrong, and the systems even “hallucinate” answers to the questions that you pose. These problems all arise from the fact that none of the systems based on Large Language Models (LLMs) actually understand meaning from language the way humans do. They simply do statistics on word frequency and collocation!
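To make “statistics on word frequency and collocation” concrete, here is a minimal Python sketch that counts which word follows which in a tiny, invented corpus and then predicts the most frequent next word. It is far simpler than a real LLM (which is a neural network trained on vast amounts of text), and the corpus and function names are hypothetical, chosen only for illustration. What it does show is how a prediction can be produced purely from co-occurrence counts, with no representation of what the words mean.

```python
from collections import Counter, defaultdict


def bigram_counts(sentences):
    """Count how often each word is immediately followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        # Pair every word with the word that comes right after it.
        for current, nxt in zip(words, words[1:]):
            counts[current][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus, invented purely for illustration.
corpus = [
    "pick up the cup",
    "pick up the glass",
    "pick up the cup please",
    "put down the cup",
]

counts = bigram_counts(corpus)
print(predict_next(counts, "the"))   # cup
print(predict_next(counts, "pick"))  # up
```

The toy model answers “cup” after “the” only because “cup” appeared more often in its data, not because it knows which object a speaker actually has in mind.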

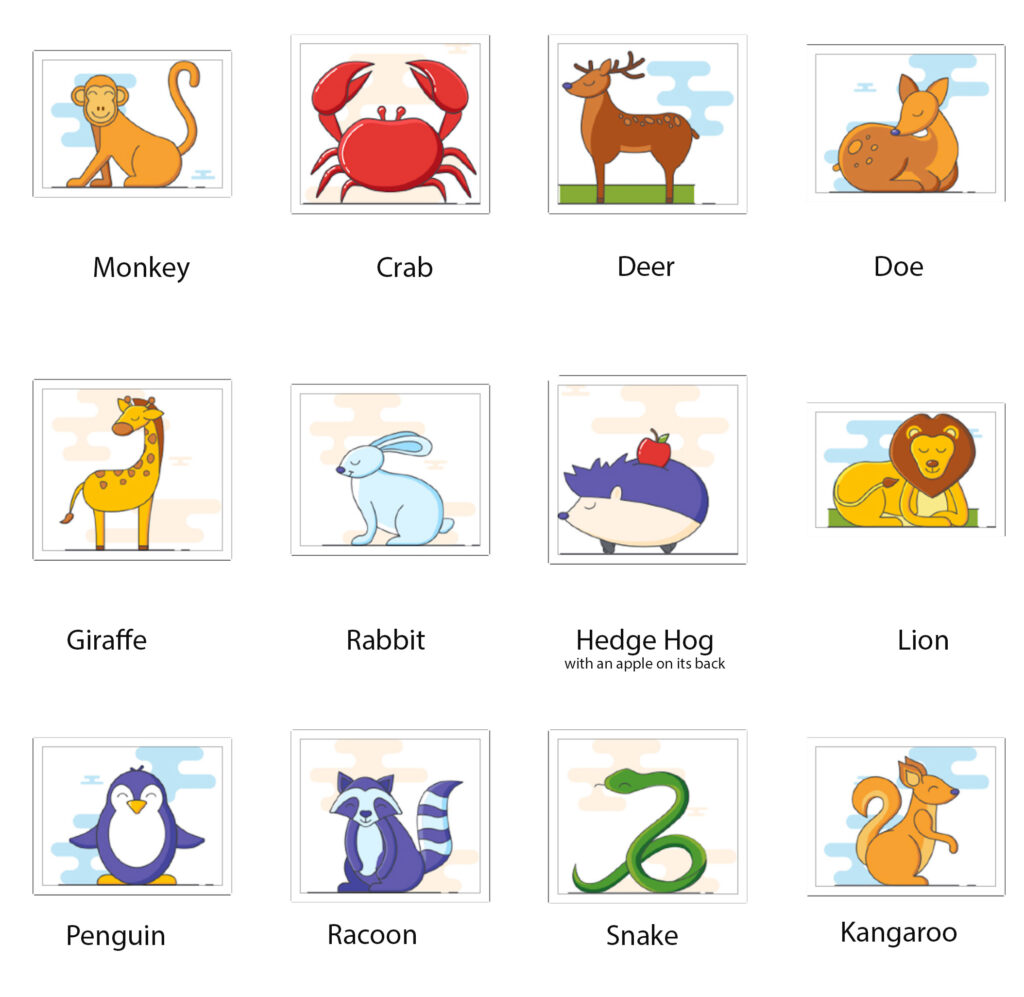

ChatGPT DALL-E

Please make an cartoon image grid of the following animals with a label under each one with what it is: Monkey Crab Deer Doe Giraffe Rabbit Hedgehog (with an apple on its back) Lion Penguin Raccoon Snake Squirrel Kangaroo.

Grok 3

Please make an cartoon image grid (no background) of the following animals with a label under each one with what it is: Monkey Crab Deer Doe Giraffe Rabbit Hedgehog (with an apple on its back) Lion Penguin Raccoon Snake Squirrel Kangaroo.

What to do? It’s simple, really.

Many years ago, John Ball concluded that, for computers to work with and understand language, we should model how the human brain understands language.

After all, the human brain, which weighs less than three and a half pounds and runs on only 12 watts of power, can understand everything communicated in a language, AS LONG AS IT HAS ALREADY CONNECTED WORDS TO MEANING THROUGH PATTERN RECOGNITION AT SOME POINT IN TIME.

John’s Patom theory

John’s Patom theory of how the brain understands language by means of pattern recognition and interconnected “meaning sets” can be directly applied to develop digital systems that fully understand meaning from language!

In other words – it creates digital language understanding systems that you can count on.

This is the basis for John’s Pat system, which allows digital machines to use human language by converting speech or text into meaning. Once data embodies meaning, computer algorithms can work with it.

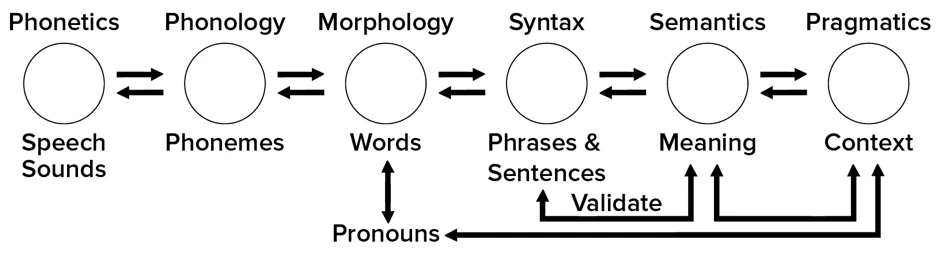

It’s important to understand that language recognition is by no means trivial.

A language has infinitely many possible sentences, and any of them can contain one or more ‘errors.’ For example, what does the following sentence mean?

It means “Can you pick up the cup?”

In spoken language, this type of speech error is common. And, for learners of a new language, there are many other types of language error that a linguistically based digital system needs to manage. The Pat system is engineered on fundamental linguistic science, which allows it to solve problems such as this. There are many such problems that only Pat can solve.

These solutions allow us to create a digital language partner (or “language parent”, as we like to say) that can help a learner master the core of a new language in as little as six months.

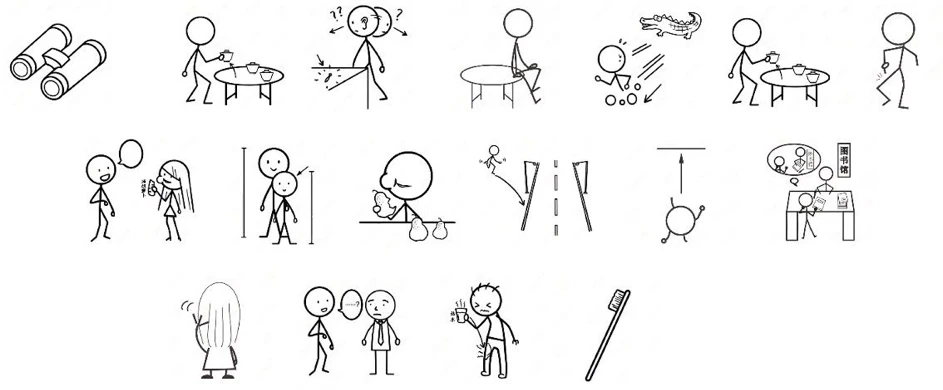

Basis for an Accurate Interactive Learning System

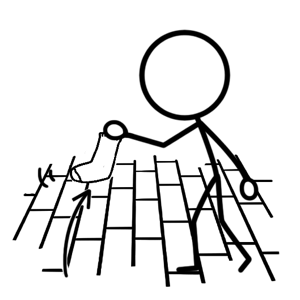

The conversion of language to its meaning allows a developer to decide what to do even in the face of multiple types of error. In the above example,

“Pick up the cup, no glass”

gives the meaning

[do’(you, 0)] CAUSE [raise’(the glass)]

Given a command to raise the glass, an algorithm developed on this basis can show a glass being picked up, or select it from a series of images. This allows us to build a fully interactive system in which the learner can use language to command the software, and be delighted when the system responds by acting on full understanding!
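To illustrate why having the meaning, rather than the raw words, makes the next step straightforward, here is a minimal Python sketch. It assumes the meaning arrives as a small data structure; the `Predicate` and `Cause` classes and the `select_image` function are hypothetical, invented for this example, and are not the Pat system’s actual output format or API. The meaning of “Pick up the cup, no glass” is written in by hand, mirroring the logical structure shown above.

```python
from dataclasses import dataclass


@dataclass
class Predicate:
    """One predicate in a logical structure, e.g. raise'(the glass)."""
    name: str
    args: tuple


@dataclass
class Cause:
    """[activity] CAUSE [result], e.g. [do'(you, 0)] CAUSE [raise'(the glass)]."""
    activity: Predicate
    result: Predicate


def strip_article(phrase):
    """Drop a leading 'the', 'a' or 'an' so 'the glass' matches the label 'glass'."""
    words = phrase.split()
    if words and words[0].lower() in {"the", "a", "an"}:
        words = words[1:]
    return " ".join(words)


def select_image(meaning, image_labels):
    """Return the label of the image the learner asked to have raised, or None."""
    # This toy handler only understands one kind of command: X CAUSE raise'(Y).
    if not isinstance(meaning, Cause) or meaning.result.name != "raise'":
        return None
    target = strip_article(meaning.result.args[0])
    return target if target in image_labels else None


# Hand-written meaning for "Pick up the cup, no glass" (assumed, not produced by Pat).
meaning = Cause(
    activity=Predicate("do'", ("you", "0")),
    result=Predicate("raise'", ("the glass",)),
)

print(select_image(meaning, ["cup", "glass", "plate"]))  # glass
```

Note that the handler never looks at the surface words “cup, no glass”; it reads only the object slot of raise’, so the learner’s self-correction has already been resolved by the time an action is chosen.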

Speech Genie will be developed on this basis.

With the Speech Genie project, we are starting by integrating important parts of the Kungfu English system with the work of John Ball, so that learners will be able to speak to their device and get a meaningful (and accurate) response.

Initially, learners will manipulate materials and symbols in a two-dimensional screen space. They will respond with actions to commands and statements from their speech avatar, and they will use speech to command their avatar to complete tasks that require the language elements they are learning.

This, of course, is just a start. As the digital avatar’s language range grows over time, more and more complex actions and interactions will become possible. Within a few years, we will be able to immerse learners in 3D virtual environments using VR technologies that are becoming more powerful by the day.

Star Trek-like “holodecks”, where learners have full physical immersion while interacting with their Speech Genie avatar.

The brains behind the Genie

Speech Genie is the result of a collaboration between two leading experts in language learning and AI.

Chris Lonsdale

A psychologist, linguist, and educator, Chris Lonsdale was educated in New Zealand and moved to China in 1981.

In 2010, he created the Kungfu English learning system, which uses phrases, not individual words, and emphasizes relevance, immersion, comprehension, and practical application. The incorporation of a conversational language partner or “parent” results in a unique learning experience that can take Chinese learners of English from a zero base to effective communication in six months. Chris’s book, The Third Ear, leads readers step by step to think about language learning in new ways. His TEDx talk about second language learning has been viewed close to 100 million times.

John Ball

The founder and CTO of Language Parent (Inc), home of his Patom natural language understanding (NLU) theory, John Ball is a leading cognitive scientist and an expert in machine intelligence and computer architecture.

His revolutionary approach provides a way for computers to break down any human language using patterns and sequences, the meaning-based way the human brain approaches language. This enables true conversational communication between people and machines. John has demonstrated his theory across nine languages. His book, How to Solve AI with Our Brain, published in November 2024, provides background on today’s generative AI models and discusses the remarkable advances ahead with machines based on brain science.